Gemini 3 Pro

Intro

The much-anticipated Gemini 3 release from Google has yielded Gemini 3 Pro (blog post) for developers: the largest of the Gemini variants. The smaller Flash and Flash Lite variants were not part of this release. The Model Card is light on details at only 8 pages. In short, Gemini 3 Pro appears to be a powerful, very expensive, agentic reasoning language model.

Gemini 3 Deep Research was released alongside it (see the same blog post).

Benchmarks & Pricing

The Model Card reports state-of-the-art results across the benchmarks Google chose to report, except SWE-Bench Verified, where Claude Sonnet 4.5 keeps an edge. Gemini 3 Pro also jumps to #1 on the aggregate Artificial Analysis Intelligence leaderboard, dethroning GPT-5.1 High. However, it is the second-most expensive model to run on that benchmark; only Grok 4 costs more. GPT-5.1 High, now #2 on the Intelligence leaderboard, costs 71.52% as much as Gemini 3 Pro to run it.
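The cost comparison is easier to read when turned around. A quick sketch that uses only the 71.52% figure above (the #2 model's benchmark cost as a share of Gemini 3 Pro's); nothing else is assumed:

```python
# The one figure taken from the text: the #2 model's cost to run the
# Artificial Analysis Intelligence benchmark, as a share of Gemini 3 Pro's cost.
runner_up_share = 0.7152

# Percentage saved by running the #2 model instead of Gemini 3 Pro.
savings_pct = (1 - runner_up_share) * 100

# How many times as much Gemini 3 Pro costs compared with the #2 model.
gemini_multiplier = 1 / runner_up_share

print(f"Savings with the #2 model: {savings_pct:.2f}%")  # → Savings with the #2 model: 28.48%
print(f"Gemini 3 Pro costs {gemini_multiplier:.2f}x as much")  # → Gemini 3 Pro costs 1.40x as much
```

In other words, Gemini 3 Pro costs roughly 1.4 times as much as the runner-up to run the benchmark.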

Availability

Gemini 3 Pro is available in several end-user products as well as on Google Vertex AI, the Gemini API, and Google AI Studio (see “Distribution” in the Model Card). The developer offerings, however, call it “Gemini 3 Pro Preview”; it is not yet clear what the “Preview” label implies.
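For developers, the most direct route is probably the Gemini API's REST endpoint. The sketch below only builds a single-turn request and does not send it. The `v1beta` endpoint path and the model ID `gemini-3-pro-preview` are my assumptions based on Google's naming conventions, so check the current model list before relying on them.

```python
import json

# Assumed REST base URL of the Gemini API (v1beta).
GEMINI_API_BASE = "https://generativelanguage.googleapis.com/v1beta"

# Assumed model ID for the developer preview; verify against the model list.
MODEL_ID = "gemini-3-pro-preview"


def build_generate_request(prompt: str, model: str = MODEL_ID) -> tuple[str, str]:
    """Return (url, json_body) for a single-turn generateContent call.

    Nothing is sent here; hand the result to any HTTP client together
    with an API key (for example in the x-goog-api-key header).
    """
    url = f"{GEMINI_API_BASE}/models/{model}:generateContent"
    body = {"contents": [{"role": "user", "parts": [{"text": prompt}]}]}
    return url, json.dumps(body)


url, body = build_generate_request("Summarize the Gemini 3 Pro Model Card.")
print(url)  # → https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-preview:generateContent
print(body)
```

Keeping request construction separate from sending makes it easy to switch between an HTTP client, the official SDK, or a proxy such as OpenRouter later.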

OpenRouter notes that the Gemini API currently offers higher throughput and lower latency than Vertex AI.

Tool Use & Agent Improvements

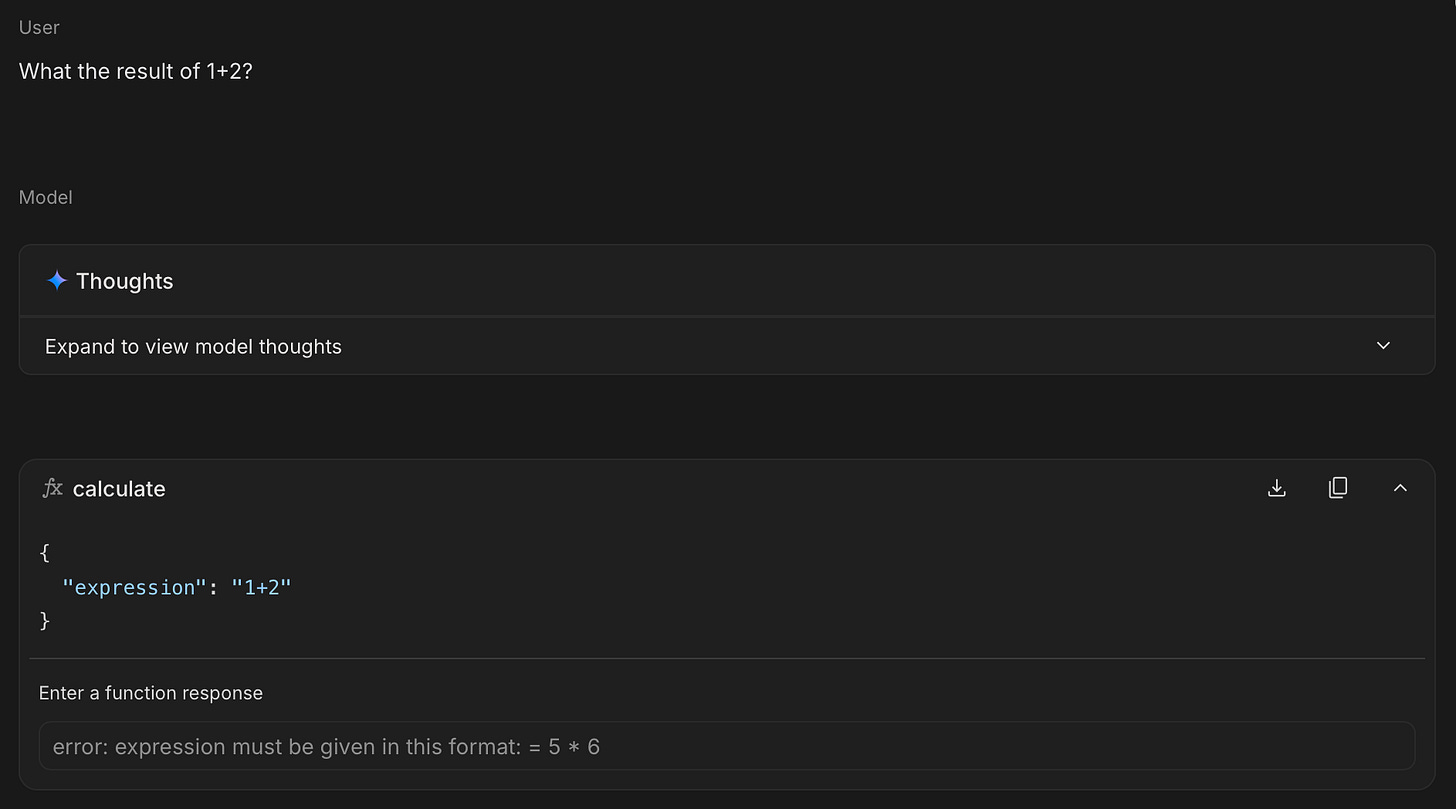

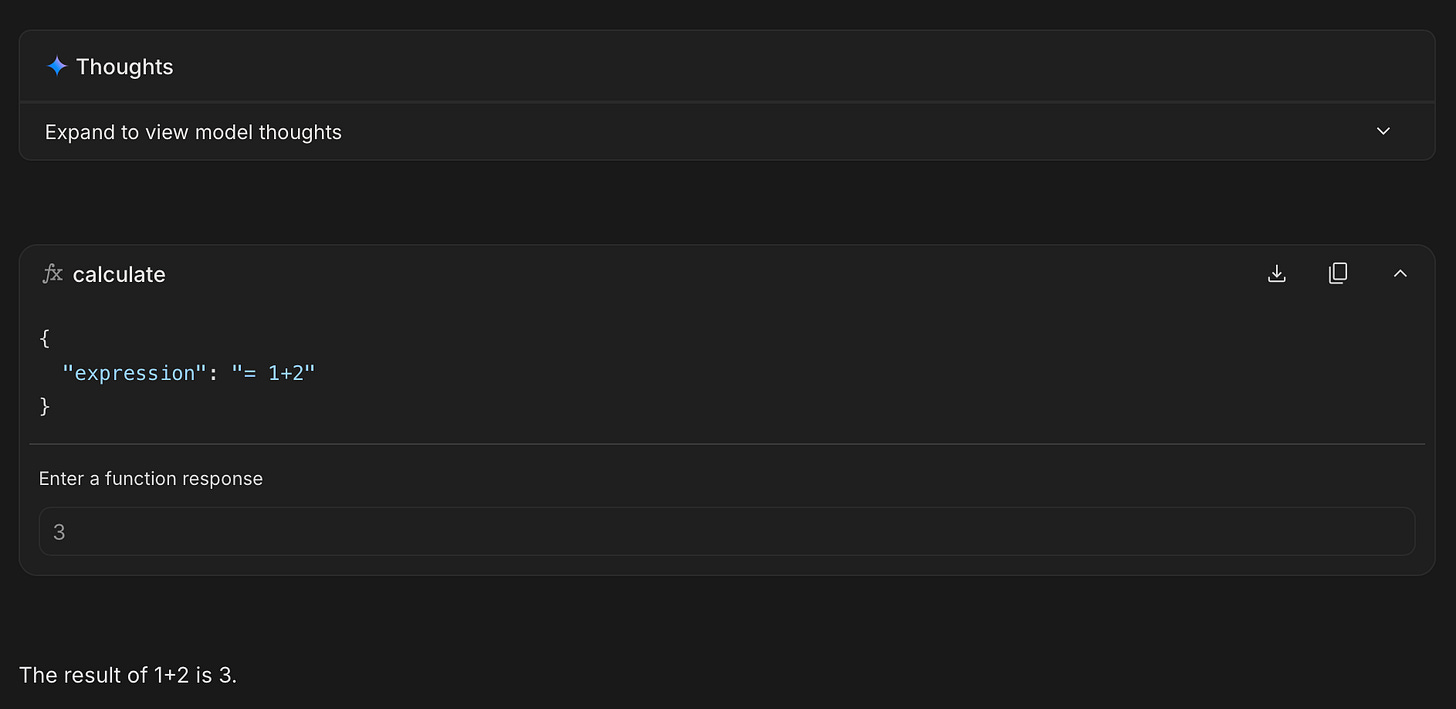

Gemini 3 Pro is a reasoning language model, and reasoning cannot be turned off entirely. There are two “thinking” levels: “High” and “Low”. Unlike the 2.5 series, 3 Pro appears to do “tool calling within its chain of thought”, something OpenAI introduced with its o3 model. As a result, the model can now take actions within the same chat turn, which is good news for agentic use cases.
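From the client's side, a turn in which the model acts before it answers boils down to a loop: send the conversation, execute any function calls the model requests, append their results, and repeat until the model replies with plain text. A minimal sketch, assuming a stubbed model (`fake_model`, hypothetical) and a hypothetical `get_weather` tool. The `functionCall`/`functionResponse` part shapes follow the Gemini REST format as I understand it, and the `thinkingLevel` field for the High/Low setting is an assumption. Whatever reasoning the real model does between calls happens server-side and is not modelled here.

```python
from typing import Any, Callable


def get_weather(city: str) -> dict[str, Any]:
    """Hypothetical tool; returns canned data instead of calling a service."""
    return {"city": city, "forecast": "sunny"}


TOOLS: dict[str, Callable[..., dict[str, Any]]] = {"get_weather": get_weather}

# Tool declaration in the (assumed) REST shape the model would receive.
TOOL_DECLARATIONS = [{"functionDeclarations": [{
    "name": "get_weather",
    "description": "Get tomorrow's forecast for a city.",
    "parameters": {"type": "object",
                   "properties": {"city": {"type": "string"}},
                   "required": ["city"]},
}]}]


def fake_model(payload: dict[str, Any]) -> dict[str, Any]:
    """Hypothetical stand-in for a generateContent call (no network).

    Requests the tool first, then answers once a functionResponse arrives.
    """
    for part in payload["contents"][-1]["parts"]:
        if "functionResponse" in part:
            forecast = part["functionResponse"]["response"]["forecast"]
            return {"role": "model", "parts": [{"text": f"It will be {forecast}."}]}
    return {"role": "model", "parts": [
        {"functionCall": {"name": "get_weather", "args": {"city": "Zurich"}}}]}


def run_turn(prompt: str, thinking_level: str = "low", max_steps: int = 5) -> str:
    """Resolve tool calls until the model returns text for this one user turn."""
    contents: list[dict[str, Any]] = [{"role": "user", "parts": [{"text": prompt}]}]
    for _ in range(max_steps):
        payload = {
            "contents": contents,
            "tools": TOOL_DECLARATIONS,
            # Assumed field name for the "Low"/"High" thinking setting.
            "generationConfig": {"thinkingConfig": {"thinkingLevel": thinking_level}},
        }
        reply = fake_model(payload)
        contents.append(reply)
        calls = [p["functionCall"] for p in reply["parts"] if "functionCall" in p]
        if not calls:
            # No tool requested: the text parts are the final answer.
            return "".join(p.get("text", "") for p in reply["parts"])
        # Run each requested tool and send the results back in the same turn.
        contents.append({"role": "user", "parts": [
            {"functionResponse": {"name": c["name"],
                                  "response": TOOLS[c["name"]](**c["args"])}}
            for c in calls]})
    raise RuntimeError("no final answer within max_steps")


print(run_turn("Will it be sunny in Zurich tomorrow?"))  # → It will be sunny.
```

The `max_steps` cap is a design choice for the sketch: it stops a model that keeps requesting tools from looping forever.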

The Google for Developers blog describes using Gemini 3 Pro within the Gemini CLI.

Transcription

Simon Willison tried using it to transcribe a city council meeting, with odd results. Most disappointingly, the timestamps are wrong, off by more than two hours by the end of the recording. The output is also not a verbatim transcript.